Are AI Models Actually Getting Better?

Understanding the gap between test scores and real-life usefulness.

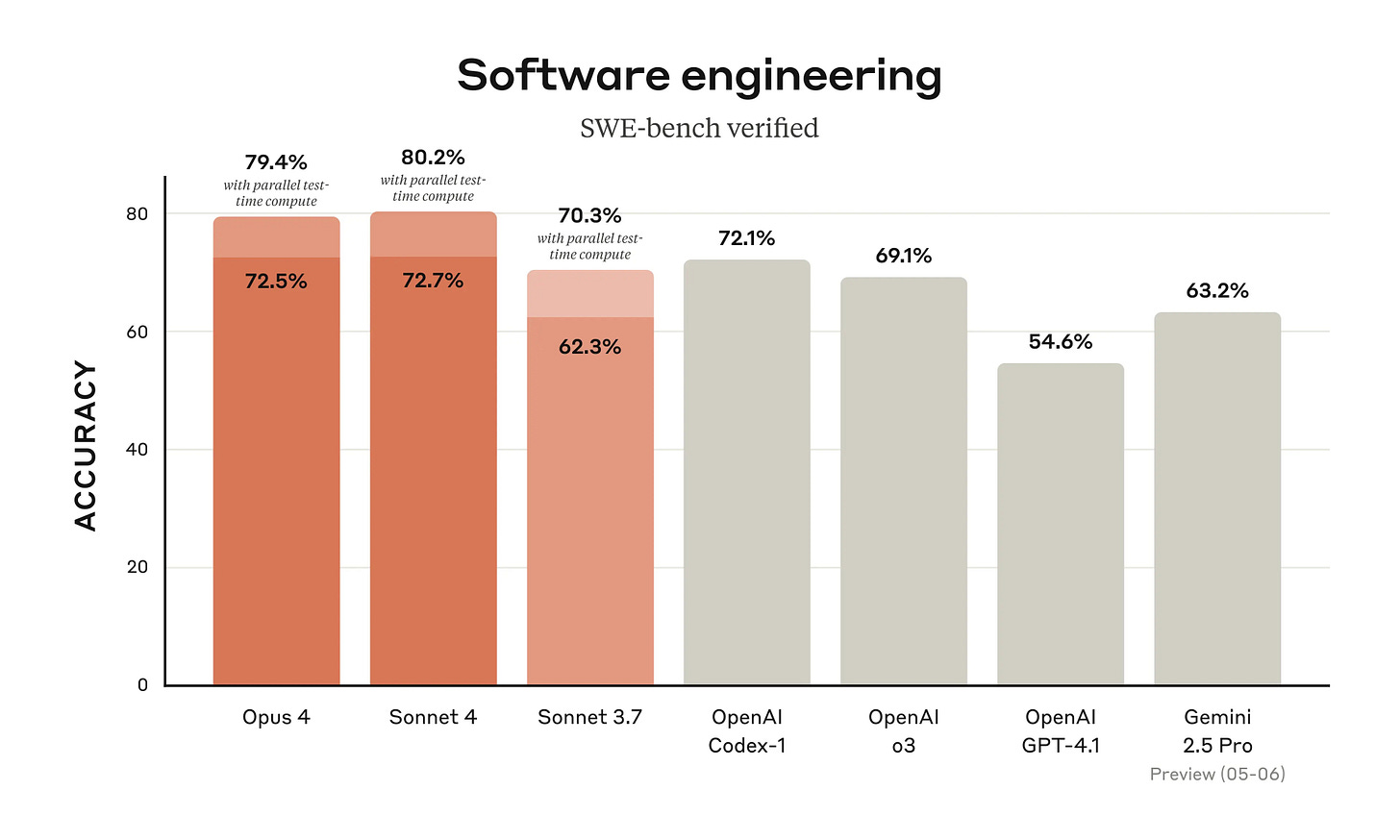

Last week, Anthropic rolled out its latest generation of AI models, Claude Opus 4 and Claude Sonnet 4. The press release says these models "set new standards" for several important tasks. Well, what else are they going to say? "Our new AI model is like, pretty okay, slightly better than the last one, maybe?" No, of course companies are going to claim they just released the absolute best model ever to grace the earth.

It feels like we get one of these announcements every other week now. But does it really make a difference for our day-to-day use? When is it going to come up with the perfect excuse to explain to my manager why I'm late for the third time this week? I still remember the leap from GPT-3.5 to GPT-4. How it went from outputting repetitive nonsense half of the time to all of a sudden becoming mostly useful was truly impressive.

Though models are still allegedly getting better all the time, there hasn't been another "wow" moment since that big jump. These AIs are like having a mediocre kid who's getting B's and C's at school, doesn't get into major trouble, and generally gets along well with others - nothing to complain about. But deep down, every so often you still hope he surprises you with some sort of hidden talent and becomes a quantum physics genius or at least plays Division I football. Similarly, the AIs are OK at almost everything. They can get a solid 8 out of 10 on almost every task, but you still can't stop hoping for a 10/10 perfect answer (that never comes).

So, are the models actually getting better, like the nice folks at the AI labs are claiming?

The answer is yes, but definitely not in a transformative way like the leap from GPT-3.5 to GPT-4. See, AI labs figure out whether a model is getting better using benchmarks, which are basically standardized tests for AIs. And as former exam-takers ourselves, we know these test scores have a few limitations, to say the least:

Doing well in one subject doesn't mean someone will do well in other subjects.

More importantly, getting a perfect score on the exam doesn't always translate to crushing it in the real world.

The same principles also apply to exams for AI. Scoring higher on a coding benchmark tells you very little about how well a model can draft your work email. And even if the improvement is on a relevant benchmark - let's say the "email composition" benchmark - there's still no guarantee that it will be able to actually write a better email for your specific use case.

Now that we've seen there's a disconnect between improvements on benchmarks and improvements in real life, let's take a closer look at the mechanisms behind it.

Why choose these "irrelevant" benchmarks?

Frontier models like Gemini 2.5, OpenAI o3, and Claude 4 all claim to be the "most intelligent model yet" in their announcements. To back up these claims, by far the most popular metrics used are math and coding benchmark scores.

Why math and code?

The first explanation is human bias. Or more specifically, human bias from the AI researchers. Most of them have math or computer science backgrounds. To them, doing well in math and coding competitions has always been a symbol of high intelligence. It's common sense: a bunch of math and computer people work on AI models, so of course it's important to them to make the models good at math and coding. God created mankind in his own image, so when AI researchers get a chance to create intelligence, they decide to create it in their own image as well.

Of course, it's not just due to a bunch of computer scientists and mathematicians trying to create enhanced digital versions of themselves. It's also affected by the technical designs of the models (read more about model architecture here).

A tenet in AI research is that intelligence comes from scaling. That is, even without radical changes in the model's architecture and algorithmic design, a model's capability (perceived intelligence) can be improved by adding more training data (read more about model training here), using more computing power, or using a larger model with more parameters. To put it simply, more is better for training AI models. Right now, efforts on scaling AI models can generally be put into two categories:

The Imitation Method (Supervised Learning): Find more example input-output pairs. You show the AI, "Here’s a question, and here’s the perfect answer." Over and over. It's like learning to make pasta by having a Michelin-starred chef hand you a plate of perfectly cooked cacio e pepe. You taste it, you see it, you know exactly what the end-state is supposed to be, and your job is to replicate that. A lot of the basic language understanding and world knowledge in these models comes from this process.

The Cranky Italian Uncle Method (Reinforcement Learning, or RL): When you can't find more input-output example pairs, it's also possible to provide only the inputs and use a separate system (either a set of rules or another model) to verify whether the output is correct. This is like when you don't have the perfect plate of pasta. Instead, you just have a very opinionated, middle-aged Italian gentleman from Naples standing over your shoulder. He won’t tell you how to make the pasta, but he will definitely let you know when you mess up. You learn by trial and error, guided by his feedback, and eventually you'll make a perfect plate of pasta that makes him stop yelling.

For a while, the first method was king. AI labs scoured the earth for more data - more of the chef-made pasta in the first example - but data is running out. They've used almost every book ever written plus the entire internet, and new data can't be created fast enough to meet the training needs. So, what to do when you can't get a hold of the perfect plate of pasta?

You have to turn to the cranky Italian uncle instead. After leaning heavily on the first method through 2023 and 2024, AI labs are increasingly looking to RL to squeeze out more intelligence.

In the RL approach, having a reliable "critic" - like how you can always trust an Italian uncle to tell if a pasta is good - is crucial. So, what kinds of problems are the easiest to get that instant, verifiable feedback for? You guessed it: coding and math. Does the code compile and produce the desired output? Do the numbers add up in the math equations? The computer can check the answers on its own, with no human in the loop, which makes training on these tasks very easy to scale.
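To make the "critic" idea concrete, here is a minimal Python sketch of what an automatic checker could look like. The function names (`math_reward`, `code_reward`) and the 0-to-1 scoring are my own assumptions for illustration, not how any particular lab does it, and a real system would run untrusted code in a sandbox instead of calling `exec` directly.

```python
from fractions import Fraction


def math_reward(model_answer: str, expected: str) -> float:
    """Return 1.0 if the model's numeric answer equals the expected one, else 0.0."""
    try:
        # Fraction parses "0.5" and "1/2" alike, so equivalent forms still match.
        correct = Fraction(model_answer.strip()) == Fraction(expected.strip())
    except (ValueError, ZeroDivisionError):
        return 0.0  # output that is not a number counts as wrong
    return 1.0 if correct else 0.0


def code_reward(candidate_source: str, func_name: str, test_cases: list) -> float:
    """Return the fraction of (args, expected) test cases the candidate code passes."""
    namespace: dict = {}
    try:
        # Illustration only: never exec untrusted code outside a sandbox.
        exec(candidate_source, namespace)
        func = namespace[func_name]
    except Exception:
        return 0.0  # code that does not even load earns nothing
    passed = 0
    for args, expected in test_cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on this case simply counts as a failure
    return passed / len(test_cases) if test_cases else 0.0
```

Notice that neither function needs a person to read the answer, which is exactly what makes this kind of feedback cheap to produce millions of times. There is no equally simple function for "is this email less boring?".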

And that, in large part, is why so much of the progress you see in AI is happening in the realms of coding and mathematics. Unfortunately, it just so happens that these are also areas where improvements might not be immediately obvious to the average person just trying to get the AI to write a less boring email.

Benchmarks ≠ Real Life

To be fair, AI labs are also working on benchmarks designed to test how well these models handle everyday conversational tasks. But, even when the scores go up on these benchmarks, it doesn’t always mean your AI assistant is about to get noticeably less weird or more helpful. Here's why.

People think that because AI researchers have PhDs and work on world-changing technologies, their psyche must be quite different from the rest of us “normal” folks. However, the vast majority of them have the same virtues and flaws in their characters as everyone else. Many AI researchers are used to being the smartest person in the room, so it’s only natural for them to possess quite large egos regarding their intelligence.

Inevitably, quantifiable metrics like benchmark scores are sometimes turned into a form of dick-measuring contest for those outliers on the right side of the intelligence spectrum. Nailing that top spot on a prominent benchmark is a badge of honor - proof that your genius is the most genius out of all geniuses. More pragmatically, it also makes for a fantastic resume. "Led the team to achieve state-of-the-art on the GLUE benchmark" looks pretty damn good when you're looking for the next cushy job or trying to raise funds for your startup. These ulterior incentives have created an obsession with benchmark scores, distracting researchers from improving the model's usefulness in real life.

However, beyond the issues stemming from human nature, there are some technical reasons why a great benchmark score might not mean what you think it means.

The first problem is data contamination. Imagine you’re about to take a final exam. If your professor accidentally emailed you the exact exam questions and answers a week before, your perfect score wouldn’t exactly be a testament to your deep understanding of macroeconomics. Same goes for AI models. Often, the training dataset is not made public, so there's no way to know for certain whether benchmark questions are included in the training data. However, given the scale of training datasets (estimated to include over 10 trillion words/tokens), it's quite likely that some questions in benchmarks are already included in the training data. So if the AI has already "seen the answers" during training, the test scores won't tell you much anymore.
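One common heuristic for spotting contamination, when you do have access to the training text, is to look for long word sequences that appear in both a benchmark question and the training data. The sketch below is a rough illustration of that idea: the function names and the default window of 8 words are assumptions I picked for the example, and real checks run over far larger corpora with more careful text normalization.

```python
def ngrams(text: str, n: int) -> set:
    """Return the set of all n-word sequences in the text (case-insensitive)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def contamination_rate(benchmark_questions: list, training_docs: list, n: int = 8) -> float:
    """Fraction of benchmark questions sharing at least one n-gram with the training docs."""
    train_grams = set()
    for doc in training_docs:
        train_grams |= ngrams(doc, n)
    # A question is flagged if any of its n-grams also shows up in training text.
    flagged = sum(1 for q in benchmark_questions if ngrams(q, n) & train_grams)
    return flagged / len(benchmark_questions) if benchmark_questions else 0.0
```

A high rate suggests the model may have "seen the answers"; a low rate does not prove the benchmark is clean, since paraphrased or translated questions slip past an exact-match check like this one.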

Then there’s overfitting. Going back to the exam analogy, let's say you're taking an exam on the NBA in the 2000s, and you know Professor Rivers, who is a huge Lakers fan, would be writing the exam. So you only studied the Lakers, from how Shaq spent his first paycheck to memorizing every cheerleader's name. In the end, you might ace the test, but would you actually say you have a good understanding of the NBA in the 2000s? Probably not. You've "overfitted" your knowledge to the Lakers.

AI models can do this too. They can be tuned to perform exceptionally well on the specific criteria of a particular benchmark. They learn what the benchmark "likes" and give it more of that. The result? A fantastic score on that benchmark, but potentially worse performance on everything else.

We saw this in the recent controversy over Meta’s Llama 4 models. To attain a high rank on the LMArena leaderboard (which uses human preferences to rate model outputs), Meta reportedly submitted a "customized model optimized for human preference" specifically for that leaderboard. The implication is that this specially tuned model is likely worse at other tasks, precisely because it was so aggressively optimized for that one contest. It also goes to show how far companies are willing to go to chase a ranking on the leaderboard.

What This Means

AI models are getting better, more so in some areas than others. Knowing how and why they've been improving along the current trajectory, and the limitations of these proclaimed improvements, here are a few things you can do to make sure you're using the AI that best serves your needs.

Most importantly, benchmark scores are not real life - this should probably be carved into the walls of every executive suite in the AI era. When the next AI vendor comes calling, waving a shiny report proclaiming their customer service bot is "#1 on the Grand Unified Chatbot Eloquence Test" (or whatever impressive-sounding name they’ve cooked up), your first reaction should be to see that as an irrelevant vanity metric.

The crucial signal for whether an AI model is any good for you has to come from within your company, not a vendor's slide deck or a university lab's leaderboard. No one who's selling you an AI solution knows the particular challenges and unique needs of your business the way those in your company do. So, before getting on board with any AI solution, it's critical to test it thoroughly in an environment that's as close to the real production environment as possible. Only trust your own results.

Ideally, testing and collecting data on AI performance within your company should not be a one-off. Instead, it's best to have a proper test-pilot-deploy feedback loop, where you're constantly gathering data on how well the AI is actually doing. This framework allows for continuous experimentation with potential improvements while maintaining stability for the majority of the organization.
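For teams with an engineer on hand, the "test" step of that loop can start very small. The Python sketch below is one possible shape, under my own assumptions: a "model" is any function that takes a prompt and returns text, each test case carries an acceptance check written by your own team, and the names (`Case`, `pass_rate`, `should_promote`) and the 5-point promotion threshold are hypothetical choices, not a standard.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    prompt: str                     # a real request taken from your own workflow
    passes: Callable[[str], bool]   # your team's check on the model's output


def pass_rate(model: Callable[[str], str], cases: list) -> float:
    """Fraction of in-house test cases whose output passes your own check."""
    if not cases:
        return 0.0
    return sum(1 for c in cases if c.passes(model(c.prompt))) / len(cases)


def should_promote(candidate, incumbent, cases: list, min_gain: float = 0.05) -> bool:
    """Move a new model to pilot only if it beats the current one by a clear margin."""
    return pass_rate(candidate, cases) - pass_rate(incumbent, cases) >= min_gain
```

Rerunning the same cases every time a vendor ships a new version, and adding fresh cases from real failures seen in the pilot, is what turns a one-off test into the feedback loop described above.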

To summarize, AI performance is not static. It varies wildly depending on the specific task and context you throw at it. So, before betting the house on the latest AI to work a miracle on your business, make sure to test it in an environment that closely mirrors the real use case. Only then can you make an informed decision on whether this specific AI solution will work for you.

Here at In & Out AI, I write about AI headlines using plain English, with clear explanations and actionable insights for business and product leaders. Subscribe today to get future editions delivered to your inbox!

If you like this post, please consider sharing it with friends and colleagues who might also benefit - it’s the best way to support this newsletter!
